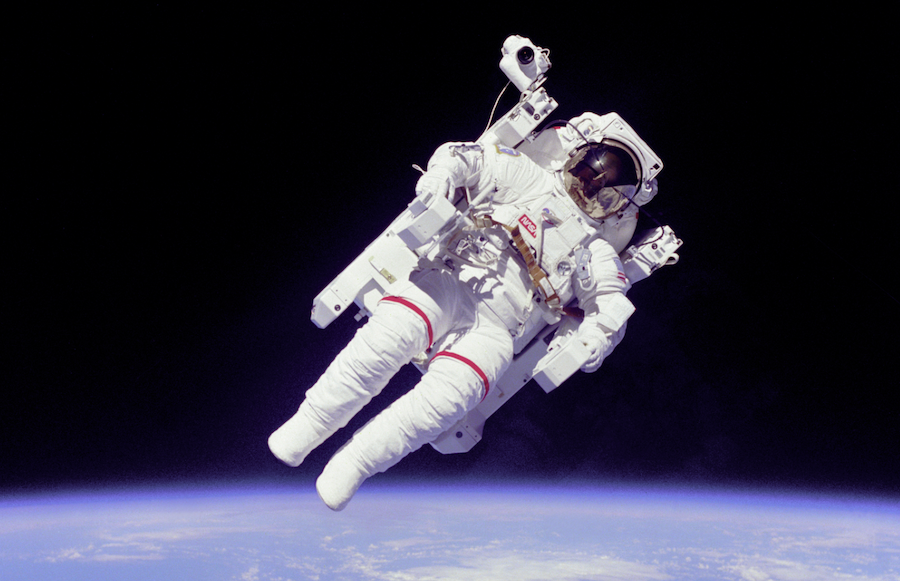

When times are hard, it often helps to zoom out for a moment—in search of a wider perspective, historical context, the forest full of trees…

Astronaut.io, an algorithmic YouTube-based project by Andrew Wong and James Thompson, offers a big picture that’s as restorative as it is odd:

> Today, you are an Astronaut. You are floating in inner space 100 miles above the surface of Earth. You peer through your window and this is what you see.

If the stars look very different today, it’s because they’re human, though not the kind who are prone to attracting the paparazzi. Rather, Astronaut is populated by ordinary citizens, with occasional appearances by pets, wildlife, video game characters, and houses, both interior and exterior.

Launch Astronaut, and you will be bearing passive witness to a parade of uneventful, untitled home video excerpts.

The experience is the opposite of earthshaking.

And that is by design.

As Wong told Wired’s Liz Stinson:

> There’s this metaphor of being on a train… you see things out the window and think, ‘Oh what is that?’ but it’s too late, it’s already gone by. Not letting someone go too deep is pretty important.

After some trial and error on Twitter, where video content rarely favors the restful, Wong and Thompson realized that the sort of material they sought resided on YouTube. Perhaps such footage has been reflexively dumped by users with no particular passion for what they’ve recorded. Or perhaps the account is a new one, its owner just beginning to figure out how to post content.

The videos on any given Astronaut journey earn their place by virtue of generic, camera-assigned file names (IMG 0034, MOV 0005, DSC 0165…), zero views, and an upload within the last week.

The overall effect is one of mesmerizing, unremarkable life going on whether it’s observed or not.

Children perform in their living room

A woman assembles a bride’s bouquet

A kitten bats a toy

A pre-fab home is moved into place

The vision is heartwarmingly global.

Astronaut is anti-star, but some people and places turn up again and again, owing to the number of nameless, inconsequential videos any one user uploads.

This week a Vietnamese fashionista, a karaoke space in Argentina, and a boxing ring in Montreal make multiple appearances, as do some very tired-looking teachers.

The effect is most soothing when you allow it to wash over you unimpeded, but if you feel compelled to linger within a certain scene, there is a red button below the frame.

(You can also click on whatever passes for the video’s title in the upper left corner to open it on YouTube, where you might be able to suss out a bit more information.)

A very young Super Mario fan has apparently colonized a parent’s account for his narrated gaming videos.

Halfway around the world, a formally dressed man sits behind a desk prior to his first-ever upload.

Some gifted dancers fail to rotate their footage prior to uploading.

A recently acquired night vision wildlife cam has already captured a number of coyotes.

And everyone who comes through the door of a Chinese household adores the happy baby within.

It’s unclear whether the algorithm will alight on any cell phone footage documenting the shocking scenes at recent protests sparked by the death of George Floyd. Perhaps not, given the urgency to share such videos and to title them in ways that clue viewers in to the who, what, where, when, and why.

For now, Astronaut appears to be the same floaty trip Jake Swearingen described in a 2017 article for New York Magazine:

> The internet is a place that often rewards the shocking, the sad, the rage-inducing — or the nakedly ambitious and attention-seeking. A morning of watching Astronaut.io is an antidote to all that.

Begin your explorations with Astronaut here.

h/t to reader Tom Hedrick

Related Content:

A Playlist of Songs to Get You Through Hard Times: Stream 20 Tracks from the Alan Lomax Collection

Soothing, Uplifting Resources for Parents & Caregivers Stressed by the COVID-19 Crisis

Ayun Halliday is an author, illustrator, theater maker and Chief Primatologist of the East Village Inky zine. Every day since March 15, she has uploaded a set of 10 microvisions of socially distanced New York City. Follow her @AyunHalliday.