Mark Twain was, in the estimation of many, the United States of America’s first truly homegrown man of letters. And in keeping with what would be recognized as the can-do American spirit, he couldn’t resist putting himself forth now and again as a man of science — or, more practically, a man of technology. Here on Open Culture, we’ve previously featured his patented inventions (including a better bra strap), the typewriter of which he made pioneering use to write a book, and even the internet-predicting story he wrote in 1898. Given Twain’s inclinations, his fame, and the time in which he lived, it may come as no surprise to hear that he also struck up a friendship with the much-romanticized inventor Nikola Tesla.

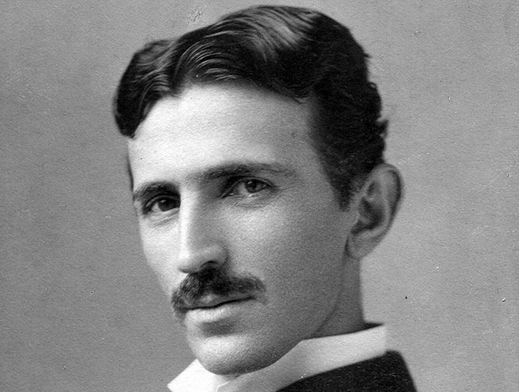

As it happens, Tesla had become a fan of Twain’s long before they met, having found solace in the American writer’s books during a long, near-fatal stretch of childhood illness. He credited his recovery to the laughter that this reading material provided him, and one imagines that seeing life in the U.S. through Twain’s eyes played some part in his eventual emigration there.

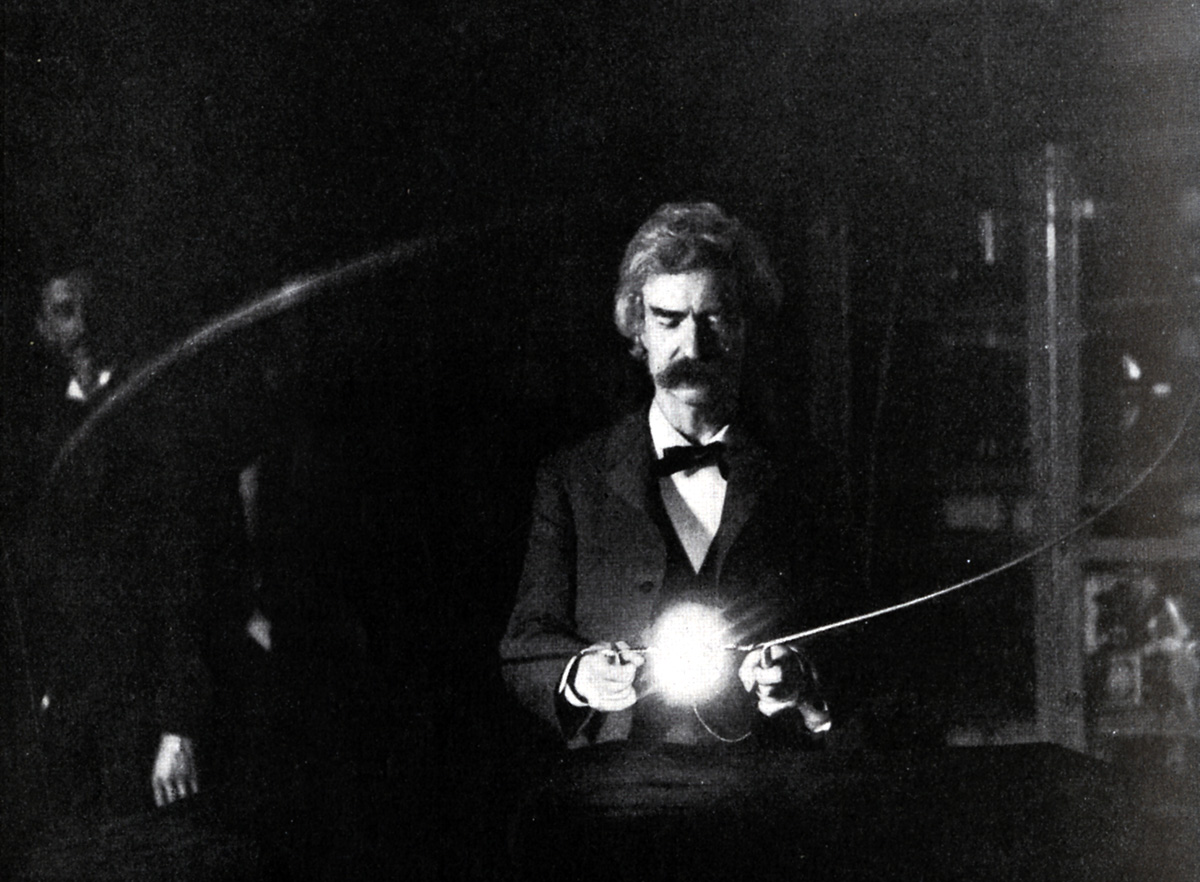

By the early 1890s, Twain himself was living in Europe, though his frequent visits to New York meant that he could drop by Tesla’s lab and see how the inventor’s latest experiments with electricity were going. It was there, in 1894, that the photograph above was taken, in which Twain holds a vacuum lamp engineered by Tesla and powered (out of frame) by the coil that bears Tesla’s name.

As Ian Harvey writes at The Vintage News, “Tesla was a scientist whose work largely revolved around electricity; at that time, making your living as a scientist and inventor could often mean having to be somewhat of a showman,” a pressure Twain understood. History records that Tesla gave Twain not only an electricity-based constipation cure that worked rather too well, but also advice against putting his money into an uncompetitive automatic typesetting machine, advice that, unfortunately, went unheeded. The onetime riverboat pilot went on to make an even more unsound investment in a powder called Plasmon, which promised to end world hunger. Perhaps Tesla’s spiritual descendants are to be found in today’s Silicon Valley, inventing the future; Mark Twain’s certainly are, underwriting any number of far-fetched schemes, if with far less of a sense of humor.

Related Content:

Mark Twain Plays With Electricity in Nikola Tesla’s Lab (Photo, 1894)

Mark Twain Wrote the First Book Ever Written With a Typewriter

Mark Twain’s Patented Inventions for Bra Straps and Other Everyday Items

When David Bowie Became Nikola Tesla: Watch His Electric Performance in The Prestige (2006)

Based in Seoul, Colin Marshall writes and broadcasts on cities, language, and culture. His projects include the Substack newsletter Books on Cities and the book The Stateless City: A Walk through 21st-Century Los Angeles. Follow him on the social network formerly known as Twitter at @colinmarshall.